One of the goals of cloud computing is to provide cost-effective solutions that are dynamic and reliable. In Chapter 7, Designing Compute Solutions, we looked at several different components: containers, Kubernetes, Azure Functions, and Logic Apps.

One of the key differences between these services and more traditional compute options, such as VMs, is the ability to scale the resources they use up and down, dynamically – that is, in response to demand.

This scaling ability is most effectively used when combined with the microservice pattern of development. To understand microservices, it helps to review the problems associated with applications that don’t use them.

A typical solution comprises a user interface, a backend database or storage mechanism, and some business logic in between. Each of these components has to run on some form of computer, which could be a VM. Using N-tier architectures, we break these components up so that each tier can run on its own VM, which allows us to configure those VMs according to the demand placed on them. For example, the user interface may not need much power at all, the business tier may need lots of CPU to perform complex calculations, and the storage may want more RAM to better cache data.

However, even with N-tier, the relevant process is still running on one or more VMs with a static amount of RAM and CPU configured. The application may have varying requirements depending on how much it is being used at any given moment. For example, an internal business application will have high usage during working hours but not outside working hours.

The hosting for an application must be sized to accommodate peak usage, meaning that compute and RAM resources are wasted during off-peak times.
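To make the cost of peak provisioning concrete, the following is a minimal sketch. The hourly demand figures are invented for illustration (they are not measurements from a real workload), and `static_waste` is a hypothetical helper name.

```python
# Hypothetical demand, in CPU cores, for each hour of one day:
# 8 quiet hours, 10 busy working hours, then 6 quiet hours.
hourly_demand = [1] * 8 + [8] * 10 + [1] * 6


def static_waste(demand):
    """Return (provisioned, used, wasted_fraction) in core-hours
    when capacity is fixed at the peak demand for the whole period."""
    peak = max(demand)
    provisioned = peak * len(demand)  # capacity paid for every hour
    used = sum(demand)                # capacity actually needed
    wasted_fraction = 1 - used / provisioned
    return provisioned, used, wasted_fraction


provisioned, used, wasted = static_waste(hourly_demand)
print(f"provisioned={provisioned} used={used} wasted={wasted:.0%}")
```

With these made-up numbers, roughly half of the provisioned core-hours go unused, which is the gap that dynamic scaling aims to close.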

Microservices seek to address this problem by using technologies that can dynamically expand and contract the resources they need in response to the demand being placed on them.

To help achieve this, the hosting technology should scale out rather than up. This means creating more copies of the instance running the service, rather than adding more RAM and CPU to an individual instance.

To get the most benefit from this pattern and ensure that resources are used as efficiently as possible at any one time, each instance needs to use as few resources as possible. In other words, rather than a service that requires a minimum of two CPUs and 4 GB of RAM, services should aim to use a fraction of a CPU and memory in the KB range. In this way, resources can be managed in a more granular, efficient, and cost-effective manner.
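The effect of instance size on scale-out efficiency can be sketched as follows. This is an illustration only: the demand figure and the quarter-CPU instance size are assumptions, the helper names are hypothetical, and CPU is expressed in millicores (1000 = one CPU) to keep the arithmetic in integers.

```python
def instances_needed(demand_millicores, instance_millicores):
    """Smallest number of identical instances that covers the demand
    (integer ceiling division)."""
    return -(-demand_millicores // instance_millicores)


def allocated(demand_millicores, instance_millicores):
    """Total CPU allocated when scaling out in whole instances."""
    count = instances_needed(demand_millicores, instance_millicores)
    return count * instance_millicores


demand = 2300  # hypothetical current demand: 2.3 CPUs

large = allocated(demand, 2000)  # instances of two CPUs each
small = allocated(demand, 250)   # instances of a quarter CPU each

print(f"two-CPU instances: {large} millicores allocated")
print(f"quarter-CPU instances: {small} millicores allocated")
```

Under these assumptions the large instances allocate 4000 millicores to serve 2300, while the small ones allocate 2500, so less capacity sits idle at any given moment.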

If processes are to use as few resources as possible, they must be smaller, which means the functions they perform should be discrete and specific.

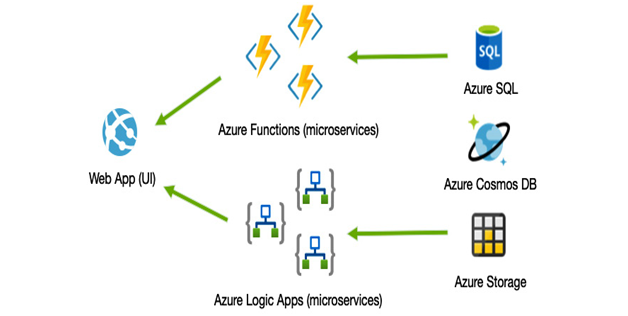

Thus, microservice architectures ensure individual services are built to be small and fast. The minimum resources can be allocated where required when needed, as depicted in the following diagram:

Figure 11.5 – Microservice-based architecture example

Azure offers many options that support this approach, and in Chapter 7, Designing Compute Solutions, we saw how containerization and orchestration technologies can help. However, they still require a minimal amount of compute to be running at all times.

Serverless technologies, such as Azure Functions and Azure Logic Apps, provide the most flexible and cost-effective options as they can be billed on a per-execution basis, meaning you only ever pay for the service when it is running.
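The difference between paying for always-on compute and paying per execution can be sketched with a small calculation. Every figure here is a made-up placeholder rather than actual Azure pricing, and the function names are hypothetical; real serverless billing also depends on factors such as execution time and memory, which this sketch ignores.

```python
def always_on_cost(hours, hourly_rate):
    """Cost of compute that runs, and is billed, for every hour."""
    return hours * hourly_rate


def per_execution_cost(executions, price_per_execution):
    """Cost when billing only happens each time the code runs."""
    return executions * price_per_execution


# Placeholder figures for one 30-day month (NOT real prices).
vm_cost = always_on_cost(hours=720, hourly_rate=0.10)
fn_cost = per_execution_cost(executions=100_000, price_per_execution=0.0002)

print(f"always-on: {vm_cost:.2f}, per-execution: {fn_cost:.2f}")
```

The point is the shape of the two formulas rather than the totals: the first grows with elapsed time regardless of use, while the second grows only with actual demand and falls to zero when nothing runs.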

From an architectural perspective, and for the AZ-304 exam, the key point is that serverless components such as Azure Functions and Logic Apps are ideally suited to microservice solutions because they automatically scale in response to demand. However, to get the most benefit out of them, code must be built with the microservice pattern in mind.

Another feature of Azure Logic Apps and Azure Functions is that they are event-driven, meaning that the logic is called in response to events happening elsewhere, such as a user clicking a button or another service triggering them. The Azure platform uses events throughout many of its components, allowing you to set triggers for various actions on many services.

For example, when a file is uploaded to a Blob storage container, it raises an event that Azure Functions or Logic Apps can hook into to perform another action. This event-driven nature, combined with the fact that actions should be fast and responsive, lends itself to other patterns, such as messaging and events.
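The event-driven idea can be sketched in plain Python. This is an in-process stand-in to show the pattern, not the Azure Functions or Logic Apps API: `EventBus`, the `blob_uploaded` event name, and `make_thumbnail` are all hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class EventBus:
    """Toy event dispatcher: handlers run only when an event is published."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[dict], None]) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> int:
        """Call every handler registered for event_type; return how many ran."""
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


processed: List[str] = []


def make_thumbnail(event: dict) -> None:
    # A small, discrete action that runs in response to an upload event.
    processed.append(f"thumbnail for {event['name']}")


bus = EventBus()
bus.subscribe("blob_uploaded", make_thumbnail)
handled = bus.publish("blob_uploaded", {"container": "images", "name": "photo.png"})
print(handled, processed)
```

The handler does no work and holds no resources until an event arrives, which is the property that makes this style a natural fit for per-execution billing and automatic scaling.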